In today’s digital landscape, protecting your content, data, and web traffic is of utmost importance. With the emergence of ChatGPT and other AI language models, there is growing concern that the companies behind these tools are scraping publishers’ content to build and improve their products. As a publisher, it is essential to understand how these bots operate and the potential risks they pose.

In this article, we will explore the world of ChatGPT and LLM scraping and discuss strategies to outsmart and protect your website traffic.

ChatGPT, its plugins, and other language models have gained star status thanks to their remarkable ability to generate human-like text. Businesses in industries such as content creation, customer service, and even creative writing have adopted them, in some cases in place of human employees. While these tools can be helpful in many ways, it is crucial to understand that they, and the bots that gather data for them, can also be used to scrape content.

Scraping, in the context of the internet, refers to the process of extracting information from websites using automated bots. These bots visit web pages and collect data; in the case of AI, that data is often used to train models further. When the companies behind ChatGPT and other LLMs scrape content, they use the data they gather to improve their models’ text generation capabilities.

However, the main problem arises when this scraping process infringes on intellectual property rights and hurts the business of publishers and media organizations. It is essential to be aware of how your content can be scraped and what that can mean for your web traffic.

One of the primary issues with scraping is that it can lead to losing control over your content. When your website’s content is scraped, it can be repurposed and distributed elsewhere without your consent. This undermines your authority as the original creator and poses challenges in maintaining the accuracy and integrity of your content.

Scraping can have a significant impact on your website’s search engine rankings. Search engines prioritize original and unique content, and when scraped content is published elsewhere, it can dilute the visibility and relevance of your website in search results. This can result in a decrease in organic traffic and potential revenue loss.

It can also lead to a distorted representation of your brand or organization. When scraped content is used inappropriately or out of context, it can misrepresent your intentions, leading to confusion or even damage to your reputation. Protecting your brand identity and ensuring that your content is used responsibly and with proper attribution is crucial.

Addressing the issue of scraping requires a multi-faceted approach. As a content creator or website owner, you can take several measures to protect your content from being scraped. Implementing technologies like CAPTCHA, IP blocking, or content access restrictions can help deter automated bots from accessing and scraping your website.
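As a rough illustration of IP blocking, the sketch below checks a client address against a list of blocked network ranges using Python’s standard `ipaddress` module. The ranges are reserved documentation networks used purely as placeholders, and `BLOCKED_NETWORKS` and `is_blocked` are hypothetical names; in practice you would populate the list from addresses you have actually seen scraping your site and call the check from your web server or middleware.

```python
import ipaddress

# Hypothetical blocklist. These are reserved documentation ranges
# (RFC 5737 for IPv4, RFC 3849 for IPv6) used only as placeholders;
# replace them with ranges you have observed scraping your site.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_blocked(client_ip: str) -> bool:
    """Return True if client_ip falls inside any blocked network."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        # A malformed address is treated as suspicious and blocked.
        return True
    # An IPv4 address is never "in" an IPv6 network (and vice versa),
    # so mixing both families in one list is safe.
    return any(addr in net for net in BLOCKED_NETWORKS)
```

Keep in mind that scrapers can rotate through many addresses, so a static blocklist works best alongside the other measures described in this article.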

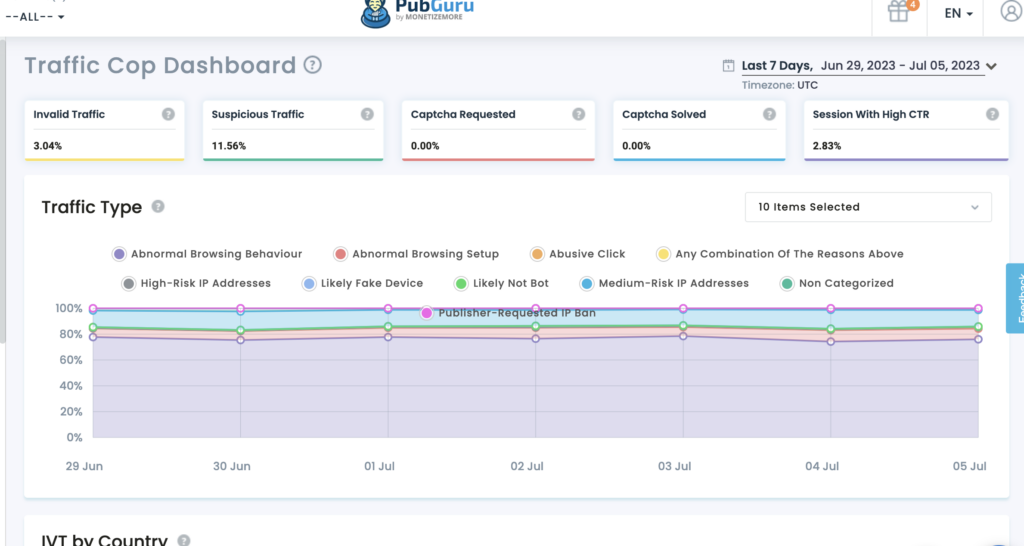

Additionally, regularly monitoring your website’s traffic and analyzing user behavior can help identify suspicious activities that may indicate scraping. By staying vigilant and proactive, you can take appropriate action to mitigate the impact of scraping on your content and business.
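One simple way to start monitoring is to count requests per client IP and per user-agent string in your server’s access logs. The sketch below assumes logs in the Apache/Nginx "combined" format; `summarize`, `heavy_hitters`, and whatever threshold you pass in are illustrative choices, not a standard tool.

```python
import re
from collections import Counter

# Matches the "combined" log format:
# IP ident user [time] "request" status size "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def summarize(lines):
    """Count requests per client IP and per user-agent string."""
    by_ip, by_agent = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip lines that are not in the combined format
        by_ip[match.group("ip")] += 1
        by_agent[match.group("agent")] += 1
    return by_ip, by_agent


def heavy_hitters(by_ip, threshold):
    """Return IPs with more than `threshold` requests, busiest first."""
    return [ip for ip, count in by_ip.most_common() if count > threshold]
```

Running this over, say, an hour of logs and reviewing the busiest IPs and unfamiliar user agents is a low-effort way to spot traffic that looks automated; the right threshold depends entirely on your site’s normal traffic.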

It is also essential for AI developers and organizations utilizing LLMs like ChatGPT to prioritize ethical considerations. Implementing strict guidelines and policies that discourage the misuse of scraping can help prevent the unauthorized gathering of content. Responsible AI development aims to strike a balance between innovation and respect for intellectual property rights.

Understanding the scraping process behind ChatGPT is crucial to dealing with this issue effectively. The bots that collect its data, such as OpenAI’s GPTBot crawler, use a technique known as web crawling: they visit web pages and follow the links they find to collect information, which is then used to train the AI model. Scraping content can be harmful to publishers and media businesses for several reasons.
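To make the crawling process concrete, here is a simplified, offline sketch of the link-following loop described above. It is not how OpenAI or any other vendor implements its crawler; `crawl`, `fetch`, and `LinkExtractor` are hypothetical names, and a real crawler would add politeness delays, robots.txt handling, deduplication, and content extraction.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl: visit a page, extract its links, queue unseen ones.

    `fetch` is any callable mapping a URL to HTML text, or None on failure.
    Returns a dict of URL -> HTML for every page visited.
    """
    seen = {start_url}
    queue = deque([start_url])
    collected = {}
    while queue and len(collected) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        # In an AI-training pipeline, text like this would be cleaned
        # and added to a corpus.
        collected[url] = html
        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        for href in parser.links:
            # Resolve relative links and drop #fragments so each page is queued once.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return collected
```

For example, with a tiny in-memory "site" passed as `fetch=site.get`, the crawler starts at the home page and reaches every page linked from it. This is also why a single crawler can request many pages in quick succession.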

First, it can lead to a loss of revenue if scraped content is republished without permission, undermining the original publisher’s ability to monetize it. It can also tarnish publishers’ reputations, because their content may be misrepresented or taken out of context when it resurfaces in AI-generated answers or on other sites.

Second, the impact on user experience is a significant concern. When ChatGPT bots flood a website with requests to scrape content, it can overload the server and slow down the loading speed for legitimate users. This can frustrate visitors and discourage them from returning to the website, leading to a decrease in traffic and potential loss of engagement and ad revenue.

Lastly, scraped content may also affect a website’s search engine rankings. Search engines favor unique, original content and may demote pages that duplicate content found elsewhere. If scraped content is republished, it can compete with the original and undermine the creator’s search engine optimization (SEO) efforts, resulting in lower visibility, less organic traffic, and a weaker online presence.

Given these risks, publishers must proactively protect their content and web traffic from ChatGPT and LLM scraping. Implementing measures such as CAPTCHAs, IP blocking, and user agent detection can help identify and block automated bots attempting to scrape content. Additionally, regularly monitoring web traffic and analyzing patterns can help detect any abnormal scraping activities and take appropriate action.
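User agent detection can be as simple as looking for the tokens that AI crawlers announce in their `User-Agent` header. In the sketch below, the token list is illustrative and incomplete (OpenAI documents `GPTBot` and `ChatGPT-User`, and Common Crawl documents `CCBot`), and `is_ai_crawler` and `block_ai_crawlers` are hypothetical helpers wrapped around a standard WSGI application. Because the header is supplied by the client, this only catches bots that identify themselves honestly.

```python
# Illustrative, incomplete list of lowercase user-agent tokens used by AI crawlers.
AI_CRAWLER_TOKENS = ("gptbot", "chatgpt-user", "ccbot")


def is_ai_crawler(user_agent):
    """Return True if the User-Agent header contains a known AI crawler token."""
    if not user_agent:
        return False
    ua = user_agent.lower()
    return any(token in ua for token in AI_CRAWLER_TOKENS)


def block_ai_crawlers(app):
    """WSGI middleware that answers 403 Forbidden to matching user agents."""

    def wrapper(environ, start_response):
        if is_ai_crawler(environ.get("HTTP_USER_AGENT", "")):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        # Everyone else is passed through to the wrapped application.
        return app(environ, start_response)

    return wrapper
```

Substring matching keeps the check cheap; the trade-off is that the token list needs occasional review as crawler operators publish new user agents.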

Furthermore, publishers can explore legal avenues to protect their content. Copyright laws and intellectual property rights can be enforced to prevent unauthorized scraping and republishing of their work. Seeking legal advice and taking necessary legal action against infringing entities can send a strong message and deter future scraping attempts.

Collaboration and communication among publishers, media businesses, and AI developers are also crucial in addressing this issue. Establishing partnerships and open dialogues can lead to developing ethical practices and guidelines for AI training, ensuring that content creators’ rights are respected while advancing AI technology.

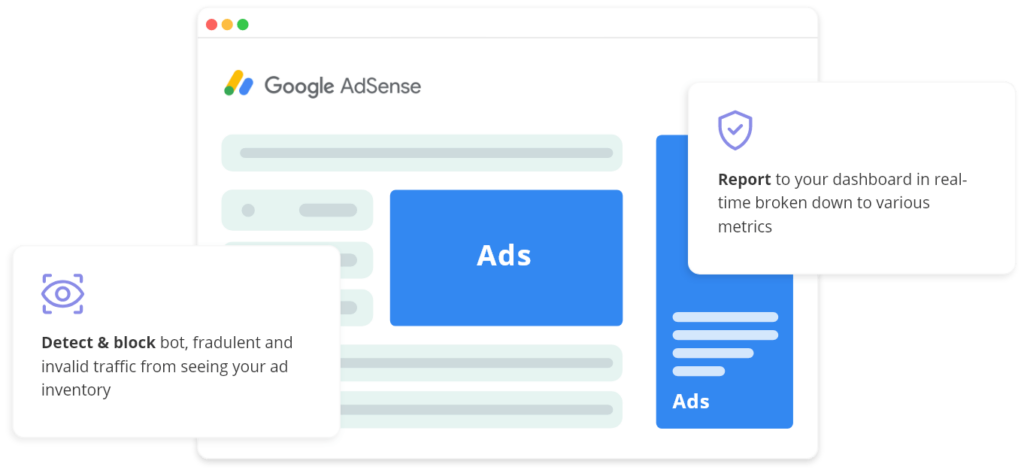

MonetizeMore’s Traffic Cop is a cutting-edge solution that acts as a robust shield, effectively safeguarding your digital assets from scraping bots and unauthorized access.

Protecting Your Content: With Traffic Cop, your valuable content remains secure from unauthorized scraping. Its advanced machine-learning algorithms enable it to identify and differentiate between legitimate user traffic and malicious bots. Traffic Cop effectively blocks scraping attempts, ensuring your content remains exclusive to your website.

Securing Your Data: Data is the lifeblood of online businesses. Traffic Cop helps you maintain the integrity and confidentiality of your data. The award-winning tool employs sophisticated algorithms to identify and block data harvesting activities, ensuring your data stays protected.

Preserving Web Traffic: Web traffic is essential to the success of any online venture. However, unauthorized scraping can deplete your resources, hurt site performance, and undermine your SEO efforts. Traffic Cop acts as a vigilant sentinel, keeping malicious bots at bay and preserving your web traffic. Its advanced bot detection mechanisms and proactive measures help ensure that genuine users have uninterrupted access to your website, enhancing user experience and maximizing your conversion potential.

Utilize rate limiting: Implement rate-limiting measures to restrict the number of requests per IP address or user, preventing bots from overwhelming your website with scraping attempts.

Rate limiting is an effective technique to prevent scraping attacks by limiting the number of requests a user or IP address can make within a specific time frame. By setting reasonable limits, you can ensure that genuine users can access your website while discouraging bots from attempting to scrape your content. Implementing rate limiting can help maintain the performance and availability of your website.
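The article does not prescribe a particular algorithm, so the sketch below uses a sliding-window log, one common approach (token buckets are another). `SlidingWindowRateLimiter` is a hypothetical class, the limits are arbitrary examples, and the injectable `clock` exists only to make it easy to test.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `limit` requests per `window` seconds for each client key."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        # Client key (e.g. an IP address) -> timestamps of its recent requests.
        self._hits = defaultdict(deque)

    def allow(self, key):
        """Record a request from `key` and return whether it is within the limit."""
        now = self.clock()
        hits = self._hits[key]
        # Drop timestamps that have slid out of the current window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # caller should respond with HTTP 429 Too Many Requests
        hits.append(now)
        return True


# Example: at most 60 requests per minute per IP address.
limiter = SlidingWindowRateLimiter(limit=60, window=60.0)
```

In a real deployment you would call `limiter.allow(client_ip)` on each request, return HTTP 429 when it yields False, and keep the counters in shared storage (for example Redis) if you run more than one server. This in-memory version also never evicts idle clients.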

Protect your RSS feeds: If you provide RSS feeds for syndication, ensure they are protected against scraping by adding authentication mechanisms or implementing API keys.

RSS feeds can be a valuable source of content syndication, but they can also be targeted by scraping bots. To protect your RSS feeds, it is crucial to implement authentication mechanisms or API keys. By requiring authentication, you can ensure that only authorized users or applications can access and consume your RSS feeds. Additionally, implementing API keys can help you track and control access to your feeds, preventing unauthorized scraping attempts.
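Here is a minimal sketch of API-key protection for a feed, using only Python’s standard library. `issue_api_key`, `hash_key`, and `authorize_feed_request` are hypothetical helpers; the idea is that each syndication partner gets its own key, you store only a hash of it, and you compare in constant time. Tying each key to a partner ID is also what lets you track and revoke access per consumer.

```python
import hashlib
import hmac
import secrets


def issue_api_key():
    """Generate a random, URL-safe key to hand to a syndication partner."""
    return secrets.token_urlsafe(32)


def hash_key(key):
    """Return the SHA-256 hex digest of a key.

    Store this digest, never the key itself. A fast hash is acceptable here
    because the keys are long random tokens, not user-chosen passwords.
    """
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def authorize_feed_request(partner_id, presented_key, key_hashes):
    """Return True if `presented_key` matches the stored hash for `partner_id`.

    `key_hashes` maps partner IDs to hashes produced by `hash_key`.
    """
    expected = key_hashes.get(partner_id)
    if expected is None or not presented_key:
        return False
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(hash_key(presented_key), expected)
```

In your feed handler you might read the partner ID and key from request headers (the header names are up to you), return HTTP 401 when `authorize_feed_request` returns False, and log successful requests per partner to see who is consuming the feed.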

By implementing these strategies, you can significantly reduce the risk of ChatGPT and LLM scraping, thereby safeguarding your content, data, and web traffic.

As ChatGPT and other LLMs continue to advance, publishers need to consider certain key aspects to protect their content and web traffic:

By proactively considering these factors, publishers can better protect their content, data, and web traffic in the age of ChatGPT and emerging LLM technologies.

In addition to these key considerations, publishers should also be aware of the potential impact of ChatGPT and LLMs on their audience engagement. With the rise of AI-generated content, there is a possibility that readers may become more skeptical of the authenticity and reliability of the information they consume. Publishers should take steps to build trust with their audience by clearly labeling AI-generated content and providing transparency about the source of the information.

Another important consideration is the potential ethical implications of AI-generated content. As LLMs become more advanced, there is a need to ensure that the content generated does not perpetuate biases or misinformation. Publishers should establish guidelines and review processes to ensure AI-generated content aligns with their editorial standards and values.

Moreover, be mindful of the evolving legal landscape surrounding AI-generated content. As these technologies become more prevalent, new regulations and legal frameworks may be put in place to govern their use. Staying abreast of these developments and consulting with legal experts can help publishers navigate the legal complexities of AI-generated content.

Safeguarding your content, data, and web traffic from ChatGPT scraping is crucial to maintaining the integrity of your business. Scraping threatens publishers’ revenue and user experience and can damage their online visibility and reputation. By understanding the scraping process, implementing protective measures, exploring legal options, and fostering collaboration, publishers can safeguard their content and mitigate the risks associated with AI-driven scraping.

MonetizeMore’s Traffic Cop offers a comprehensive solution to keep your content, data, and web traffic safe. By leveraging advanced bot detection and proactive security measures, Traffic Cop enables you to focus on your core business while safeguarding your digital assets effectively.

With over ten years at the forefront of programmatic advertising, Aleesha Jacob is a renowned Ad-Tech expert, blending innovative strategies with cutting-edge technology. Her insights have reshaped programmatic advertising, leading to groundbreaking campaigns and 10X ROI increases for publishers and global brands. She believes in setting new standards in dynamic ad targeting and optimization.
